As society grows increasingly dependent on the internet and digital technologies, it is crucial to recognize that terrorist organizations are following the same shift.

These organizations tend to adopt technology early, exploiting emerging tools and platforms to further their nefarious agendas.

Generative AI, also known as GenAI, is a branch of Artificial Intelligence (AI) that can generate diverse forms of data, including but not limited to photos, videos, audio, text, and 3D models.

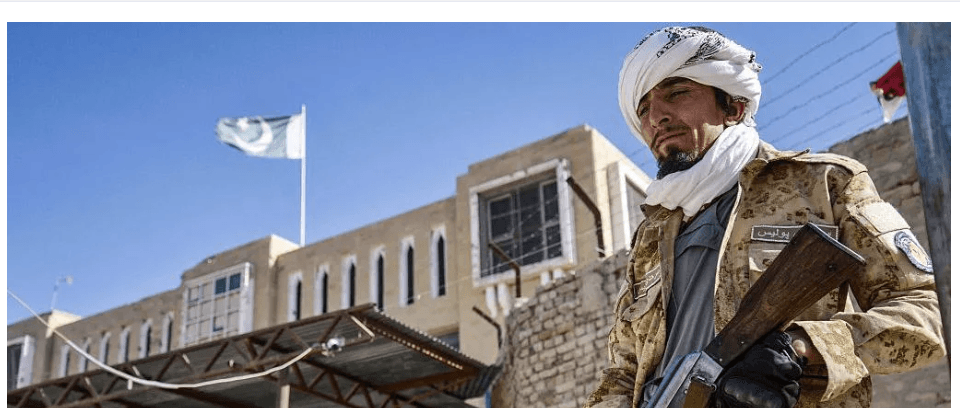

Extremist factions have begun experimenting with artificial intelligence, particularly generative AI, to produce a deluge of fresh propaganda.

Experts are concerned that these organizations' growing use of generative AI may undermine the efforts Big Tech has made in recent years to prevent their content from being accessed online.

Terrorist organizations have begun using AI-powered tools to create propaganda content.

Recently, Islamic State support groups ran a social media ruse on Facebook and YouTube, posing as popular English- and Arabic-language television channels and using AI-powered voice-overs to deliver ideological and operational updates to their followers in the guise of news.

Deepfakes, a product of the convergence of artificial intelligence manipulation techniques, have garnered significant attention due to their profound implications.

Deepfakes may be used in radicalization by exploiting individuals' emotional and ideological susceptibilities.

Adversarial entities can alter recordings to fabricate endorsements of extremist ideology by prominent individuals, disseminate false testimonies to support extreme ideas, and spread propaganda that glorifies violence and incites acts of terrorism.

Hostile groups have also used AI-powered tools to disseminate extremist content.

Terrorist organizations use generative AI for a range of purposes that go beyond just manipulating images.

Their strategies include using auto-translation systems to translate propaganda efficiently into many languages and generating personalized messages at scale to enhance online recruitment.

AI-enabled communication platforms, mainly chat applications, have the potential to be powerful tools for terrorists aiming to radicalize and recruit individuals.

The proliferation and rapid integration of advanced deep-learning models, exemplified by generative artificial intelligence, have raised concerns that terrorists and violent extremists could use these AI tools to augment their activities in both the virtual and physical realms.

The threat of malicious generative AI use can be grouped into three categories: digital, physical, and political security.

Extremist groups have been producing neo-Nazi video games, pro-ISIS posts on TikTok, and music that promotes white supremacy.

Generative AI can streamline these processes and enable the swift modification of existing music and films into realistic versions packed with hatred.

The current state of artificial intelligence reflects the rapid expansion of generative AI.

According to Tech Against Terrorism, with the advent of large language models (LLMs), content that terrorist groups generate or edit with LLMs could evade and defeat existing content moderation technologies such as hashing.
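The point about hashing can be illustrated with a minimal sketch. It assumes a simplified moderation setup in which known content is matched by an exact cryptographic hash, and it uses harmless placeholder text. The function names (`exact_hash`, `shingles`, `jaccard`) are hypothetical and written for this illustration only; production systems typically rely on perceptual hashes for images and video, which are not shown here.

```python
import hashlib


def exact_hash(text: str) -> str:
    """Return the SHA-256 digest of the text, as an exact-match fingerprint."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def shingles(text: str, k: int = 3) -> set:
    """Split text into overlapping k-word sequences (word shingles)."""
    words = text.lower().split()
    return {" ".join(words[i:i + k]) for i in range(max(len(words) - k + 1, 1))}


def jaccard(a: str, b: str, k: int = 3) -> float:
    """Jaccard similarity of two texts' shingle sets, between 0.0 and 1.0."""
    sa, sb = shingles(a, k), shingles(b, k)
    return len(sa & sb) / len(sa | sb)


# Harmless placeholder text standing in for an item in a hash database.
known = "the quick brown fox jumps over the lazy dog near the river bank"
# The same text with a single word changed, as an automated edit might do.
edited = "the quick brown fox leaps over the lazy dog near the river bank"

# The exact fingerprints no longer match after a one-word edit.
hashes_match = exact_hash(known) == exact_hash(edited)

# A similarity measure still sees substantial overlap between the two texts.
similarity = jaccard(known, edited)

print("exact hashes match:", hashes_match)
print("shingle similarity:", round(similarity, 2))
```

In this toy setting, a single changed word produces a completely different exact hash, while the overlap-based score stays well above zero. That gap is one reason rewritten or lightly edited content is hard to catch with exact matching alone.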

According to the latest annual McKinsey Global Survey, organizations have embedded AI capabilities to explore GenAI's potential, yet few firms are fully prepared for the widespread risks GenAI poses.

Technology companies have implemented measures to prohibit the creation of highly controversial content.

According to the Organisation for Economic Co-operation and Development (OECD), there are over 1,000 AI laws and policy initiatives across 69 countries, territories, and the European Union (EU).

These efforts include comprehensive legislation, legislation focused on specific use cases, national AI strategies, and voluntary guidelines and standards.

However, despite all these policy initiatives, certain actors can still modify the datasets used by generative AI models to selectively create and distribute damaging material, so the risk of adversarial exploitation remains.

Rules of the road

Every nation is struggling to keep up with the constantly shifting landscape of online terrorist attacks.

Most nations are working on regulation to counter these threats, but that regulation is aimed at managing the risks posed by social media platforms rather than by artificial intelligence.

A total ban on artificial intelligence is impossible, as AI is developed by the commercial sector rather than by governments.

To counter the use of generative AI by terrorist and hostile groups, governments, the private sector, technology companies, and individuals should all put measures in place.

First, governments should devote significant resources to evaluating advanced AI technologies as a way of containing risks.

Second, governments and the private sector should develop generative AI tools that detect terrorist content based on a semantic understanding of that content.

Third, the public should be educated about the threat of generative AI. This can be done through the development of artificial intelligence and ethics curricula.

To tackle the difficulties presented by the use of generative AI to disseminate extremist propaganda, both AI and social media companies must undertake a comprehensive range of measures.

To successfully reduce the dangers, it is necessary to go beyond basic deterrents such as watermarks and use more advanced tactics.

It is important to closely monitor how malicious actors may bypass protective measures or use AI technologies to enhance their influence tactics.

Through their investments in advanced monitoring of their platforms, AI companies are already implementing proactive measures, such as suspending accounts involved in malicious activities, restricting specific prompts that contribute to the creation of harmful content, and warning users about potential misuse.

Nevertheless, many other actions can be taken, such as collaborating with social media companies to organize cross-sector hashing initiatives involving artificial intelligence firms, social media platforms, and even intelligence personnel.

These proposed exercises should aim to replicate authentic exploits, facilitating deliberations on vulnerabilities, defensive strategies, and the enhancement of countermeasures within a regulated setting.

Enhanced collaboration across the corporate and governmental sectors, academia, high-tech, and the security community would raise awareness of the possible misuse of AI-driven platforms by violent extremists, thereby promoting the creation of more advanced safeguards and countermeasures.

By participating in these cooperative endeavours, which pool specialized knowledge, those involved can develop a thorough understanding of possible AI risks and build strong, unified safeguards.