How technology is transforming daily life in Kenya

Mobile technology is the major driving force behind Kenya’s digital revolution.

In practice, the AI can generate a video from as few as three input images.

In Summary

China's generative AI tools are carving out a unique niche, offering a blend of entertainment and practical benefits, while also playing a key role in preserving cultural heritage.

Among them, an image-to-video tool called Vidu-1.5, launched last week by a Beijing-based AI startup, has been proclaimed a multimodal model to support multi-entity consistency.

In practice, this means the AI can generate a video from as few as three input images.

For example, in a video shared by the company, the inputs -- "A man, a futuristic mecha suit, and a bustling cityscape at night" -- are seamlessly blended into a cohesive montage, all within just 30 seconds.

Understanding and controlling multiple entities -- such as the person, attire and environment -- has always been the biggest challenge in AI-generated video technology.

Ever since ChatGPT introduced its pioneering Sora, multiple Chinese tech firms have swiftly stepped up to the plate, rolling out offerings that boast unique characteristics. ShengShu Technology's Vidu is one popular example.

"Look how consistent is that suit," Stefano Rivera, an AI product aficionado wowed with admiration in a tweet, calling himself a "super-fan" of Vidu "from day 1."

This AI-generated content (AIGC) tool has already ignited a surge of creative enthusiasm among global individual creators, leading to playful and imaginative clips like Leonardo DiCaprio showcasing haute couture on the runway, Elon Musk cruising on an electric scooter in a flamboyant Chinese jacket, and a series of Japanese anime scenes.

Vidu's greatest breakthrough is establishing logical relationships among multiple user-specified objects within a scene, said Tang Jiayu, the CEO of Shengshu Technology, in a written response to Xinhua.

With previous text-to-video tools, generating scenes like "a boy holding the cake in a crystal setting" would yield different images of the boy, cake, and crystal each time, much like opening a blind box.

Now, with multi-subject consistency, the identities of the boy, cake and crystal can be preserved throughout the video, maintaining true-to-nature continuity, said Tang.

Chinese entrepreneurs like Tang, along with global investors with substantial capital, are rapidly pouring into the AIGC sector, expanding their market footprint in China.

In August, Zhipu AI launched its large video generation model product Ying.

This month, Kuaishou, a leading video platform in China, rolled out its KLING AI app on the Apple and Android stores, featuring a continuation writing that allows users to extend their generated videos by up to approximately three minutes.

Last week, Forbes China's top 50 innovative companies list featured eight large-scale model companies, which constituted the highest proportion among the selected firms.

China has filed and launched more than 180 AI generative content models that can provide services to the public, said an official from the Cyberspace Administration of China in August.

Out of more than 1,300 AI large language models (LLMs) globally, China accounts for more than 30 per cent, making it the second-largest contributor after the United States, according to a white paper on the global digital economy released in July by the China Academy of Information and Communications Technology.

Generative AI is set to add an estimated 7 trillion U.S. dollars to the global economy, with China expected to contribute nearly a third of this amount, accounting for approximately 2 trillion dollars, as shown by a Mckinsey report.

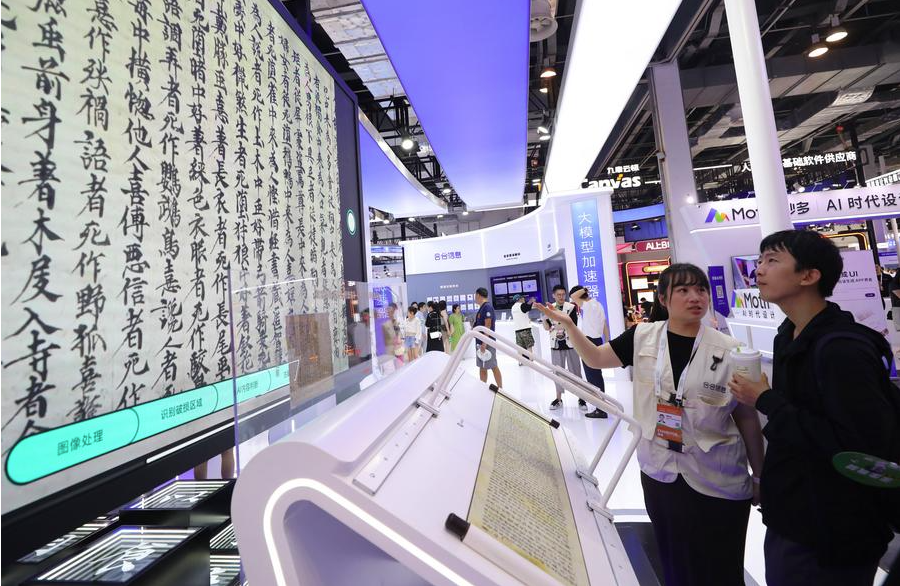

AI FOR CULTURAL PROTECTION

Beyond facilitating entertainment creation for online users, AIGC tools are being increasingly applied across diverse scenarios in China.

The preservation and promotion of cultural heritage is one of them.

A home-grown generative AI tool coded Jimeng, developed by ByteDance, has been employed to craft a fully AI-generated sci-fi short drama aimed at promoting the ancient Chinese culture, the first of its kind in the country.

"Sanxingdui: Future Apocalypse," published in July, follows a near-future narrative in which protagonists venture into a digitally reconstructed ancient Shu kingdom dating back over 3,000 years to avert an impending civilisation crisis.

The 12-episode series employed multiple generative technologies, including AI script-writing, concept and storyboard design, image-to-video conversion, video editing and media content enhancement.

Leveraging its proprietary multimodal large-scale model, ShengShu's AI engineers analysed extensive collections of ancient mural data from the Yongle Palace, the largest Taoist temple in China.

The 800-year-old temple's murals are beset by problems like colour fading, dust cover and deterioration.

Yet, their grand scale, distinctive style and rich intricacy significantly complicated the restoration efforts.

The engineers have trained the AI with Chinese mural art data, allowing it to comprehend and replicate the distinctive style of those murals, from colour to brush technique.

This enabled automated restoration tasks like digital colouring and filling in missing details, and the AI can mimic the brushwork of mural painters to redraw the facial features of deities in the murals, said Tang.

Mobile technology is the major driving force behind Kenya’s digital revolution.